Image Annotation in Computer Vision:

Computer vision is one of the most important areas of machine learning and AI research. To be precise, computer vision research aims to enable computers to visually perceive and comprehend the environment. Computer vision applications are numerous and ground-breaking, ranging from crewless aerial vehicles and drones to facial recognition software and medical diagnosis technology.

Since computer vision aims to create machines that can duplicate or outperform human vision, training such models necessitates a vast collection of annotated images.

The process of adding labels or other information to a data collection is known as data annotation. Machine learning algorithms are frequently trained using labeled datasets. Most computer vision models require many annotated images or videos to learn patterns.

Even when done manually, data annotation can be a time-consuming operation. By offering features like auto labeling, smart polygon selection, and monitoring tagged objects from frame to frame, a rise in AI-powered labeling tools is altering how data annotation is done.

Types of Images annotation used in Machine learning:

To teach computers to recognize different objects, annotated images are crucial to machine learning. Using a unique technique, image annotations highlight and label a specific object.

According to the needs and kinds of images, many different annotation techniques are used to annotate images. Here, we compiled a list of the most widely used picture annotation methods and discussed them.

Bounding box Annotation:

Bounding boxes are one of the most often used types of image annotation in computer vision due to its versatility and simplicity. Bound boxes surround objects and aid in the computer vision network's ability to discover intriguing objects. Because you just need to input the X and Y coordinates for the box's upper left and bottom right corners, they are easy to create.Virtually every potential object can be detected using the bounding box, considerably improving the accuracy of an object detection system.

Bounding boxes are used in computer vision image annotation to help networks locate objects. In models, bounding boxes are helpful for identifying and locating objects. When checking for collisions between objects, bounding boxes are typically used.

The use of bounding boxes and object detection in autonomous driving is obvious. Autonomous driving systems may tag construction site objects in addition to locating vehicles on the road in order to analyse site safety and provide robots the ability to recognise objects in diverse settings.

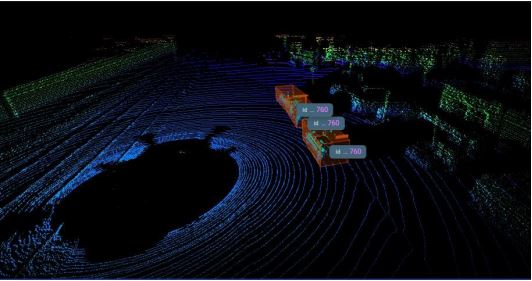

3D Cuboid Annotation

Like bounding boxes, 3D cuboid annotation requests annotators to outline

certain portions of

an image. Bounding boxes merely showed length and breadth, whereas 3D

cuboids include

labels for length, width, and a rough representation of depth. Human

annotators use 3D

cuboid annotation to create a box around the object of interest and set

anchor points at each

object's edges. The annotator makes an educated guess as to where the edge

would be

based on the image's size, height, and angle if one of the item's edges is

obscured or

blocked by another object.

When a computer vision system must identify not only an object but also

anticipate its overall

shape and volume, 3D cuboids are used. When creating a computer vision

system for an

autonomous system that can move around and make predictions about things in

its

environment, 3D cuboids are most typically used. Creating vision systems for

autonomous

vehicles and locomotive robots are examples of applications for 3D cuboids

in computer

vision.

Landmark Annotation

Used as dot annotation, it is mainly useful to recognize the shapes' dissimilarities and count the microscopic items. It is primarily used to find objects in far-off places like satellite photos. However, it can also identify athletes' faces and facial traits by detecting various stances taken by athletes or sports players. However, it is also used for computer vision in the development of self-driving vehicles and other autonomous vehicle types to predict the movements of pedestrians.

Line Annotation:

The process of line annotation entails the construction of lines and

splines, typically used to

indicate where one area of a picture ends, and another begins. A line

annotation is

employed when a region needs to be annotated and can be thought of as a

border but is too

small or thin for a bounding box or another sort of annotation to make

sense.

Splines and lines are simple to annotate and are frequently used in

situations like teaching

warehouse robots to distinguish different conveyor belt components or

teaching autonomous

vehicles to recognize lanes.

Polygon Annotation:

It is one of the quickest and most intelligent ways to annotate different kinds of things for machine learning. In this technique of picture annotation, the borders of an object in the frame are accurately drawn to the highest degree, assisting in the identification of the object's proper size and shape. In sports analytics, this kind of picture annotation approach recognizes numerous items in greater detail, such as street signs, logos, and face features.

Semantic segmentation:

Compared to the instances given before it, which focus on detecting an

object's boundaries

or outer edges, semantic segmentation is far more accurate and thorough.

Each pixel in a

complete image is tagged as part of the semantic segmentation process.

Annotators are

frequently provided a list of predetermined tags from which they must tag

each element on

the page for projects needing semantic segmentation. Annotators would draw

lines around a

group of pixels they intended to tag on systems that are comparable to those

for polygonal

annotation. Platforms with AI assistance can also be used for this. For

instance, the

programme might roughly segment the edges of a car but might inadvertently

segment the

shadows beneath the car. Similar situations would require human annotators

to clip using a

different tool.

Semantic segmentation is also widely employed in the manufacturing of

medical imaging

equipment. Annotators are asked to name each body part with the proper name

for anatomy

and body part labelling after receiving a photograph of a person. Semantic

segmentation can

also be used for exceedingly precise tasks like recognising brain lesions in

CT scan images.

The conclusion:

The easiest thing to do is an experiment by implementing them and observing which annotation techniques perform best for your application now that you are more familiar with the various types of image annotation and potential use cases for them. Regardless of the use case or type of image annotation, having a partner you can rely on to launch your next machine learning project is quite valuable. Contact our Howetechworks team to get going right now